Multiple Regression

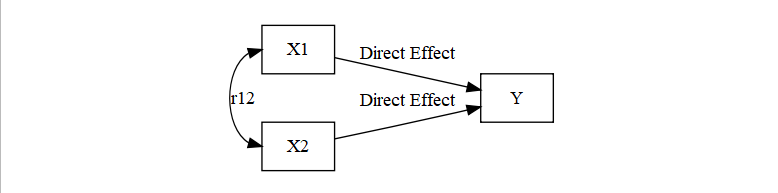

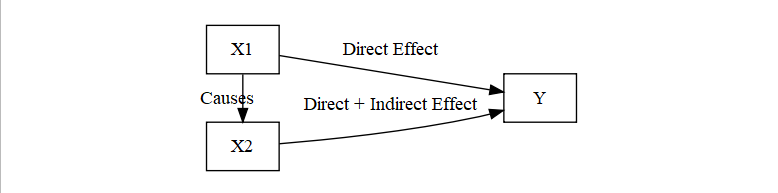

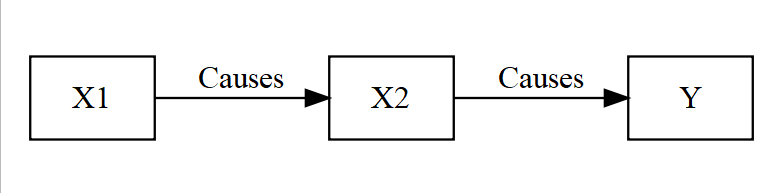

Causal Modeling

- X causes Y, e.g., eating too many cookies will make you gain weight

- We need to make sure that the cause is not spurious.

- Causal Path analysis

- Direct and Indirect Effects

- Direct: X1 -> Y (which can control for X2)

- Indirect: X1 -> X2 -> Y


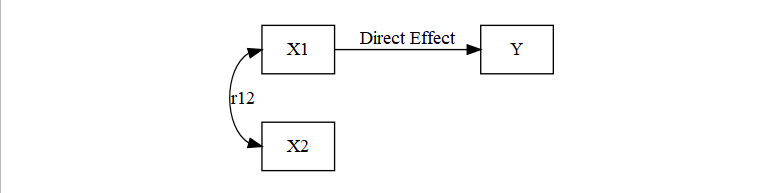

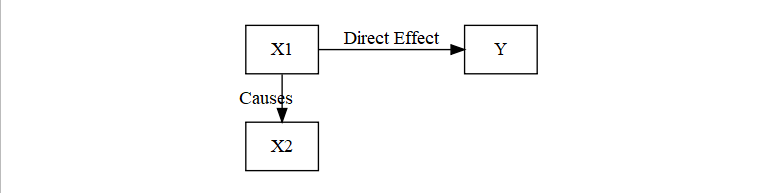

Partial Redundancy (most common issue)

- There are a few possibilities

- Model A: both X1 and X2 are causing Y

- Model B: X1 and X2 are both causing Y, but X1 is also causing X2, so part of X1's effect on Y is indirect (through X2)

- Both occur when \(r_{Y1} > r_{Y2}r_{12}\) and \(r_{Y2} > r_{Y1}r_{12}\)

- It is hard to know from the correlations alone whether you have Model A or Model B
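One way to see where these two inequalities come from, assuming standardized variables and a positive \(r_{12}\) (the \(\beta\) notation here is an addition, not part of the original notes): the standardized slopes of the two-predictor model are

\[
\beta_{Y1\cdot 2} = \frac{r_{Y1} - r_{Y2}r_{12}}{1 - r_{12}^2},
\qquad
\beta_{Y2\cdot 1} = \frac{r_{Y2} - r_{Y1}r_{12}}{1 - r_{12}^2}
\]

Because \(1 - r_{12}^2 > 0\), the condition \(r_{Y1} > r_{Y2}r_{12}\) is the same as \(\beta_{Y1\cdot 2} > 0\). The condition \(r_{Y2} > r_{Y1}r_{12}\) is the same as \(\beta_{Y1\cdot 2} < r_{Y1}\), since

\[
\frac{r_{Y1} - r_{Y2}r_{12}}{1 - r_{12}^2} < r_{Y1}
\iff r_{Y1}r_{12}^2 < r_{Y2}r_{12}
\iff r_{Y1}r_{12} < r_{Y2}
\]

By symmetry the same holds for X2. So under partial redundancy each predictor keeps a positive slope, but that slope is smaller than its zero-order correlation with Y.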

Back to last week's example

- Practice Time (X1)

- Performance Anxiety (X2)

- Memory Errors (Y)

| Variable | Practice Time (X1) | Performance Anxiety (X2) | Memory Errors (Y) |
|---|---|---|---|
| Practice Time (X1) | 1 | .3 | .6 |
| Performance Anxiety (X2) | .3 | 1 | .4 |
| Memory Errors (Y) | .6 | .4 | 1 |

- The model we used was Memory Errors (Y) ~ Intercept + Slope × Practice Time (X1) + Slope × Performance Anxiety (X2)
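Because the example is defined by its correlation matrix, the standardized slopes of this model can be worked out before simulating any data. The sketch below assumes standardized variables; the names `R_xx`, `r_xy`, `beta`, and `partial_redundancy` are illustrative and not part of the original notes.

```r
# Correlations from the table above
r_y1 <- 0.6  # Practice Time (X1) with Memory Errors (Y)
r_y2 <- 0.4  # Performance Anxiety (X2) with Memory Errors (Y)
r_12 <- 0.3  # Practice Time (X1) with Performance Anxiety (X2)

# Partial redundancy check: each zero-order r exceeds the part
# that could be carried by the other predictor
partial_redundancy <- (r_y1 > r_y2 * r_12) && (r_y2 > r_y1 * r_12)

# Standardized slopes solve R_xx %*% beta = r_xy
R_xx <- matrix(c(1,    r_12,
                 r_12, 1), nrow = 2, byrow = TRUE)
r_xy <- c(r_y1, r_y2)
beta <- solve(R_xx, r_xy)
names(beta) <- c("Practice", "Anxiety")

# For standardized variables, multiple R^2 is sum(beta * r_xy)
R2 <- sum(beta * r_xy)

print(partial_redundancy)
print(round(beta, 3))
print(round(R2, 3))
```

Both slopes come out smaller than their zero-order correlations (about .53 versus .6 for Practice, and about .24 versus .4 for Anxiety), which is the signature of partial redundancy.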

library(MASS) #create data

py1 =.6 #Cor between X1 (Practice Time) and Memory Errors

py2 =.4 #Cor between X2 (Performance Anxiety) and Memory Errors

p12= .3 #Cor between X1 (Practice Time) and X2 (Performance Anxiety)

Means.X1X2Y<- c(10,10,10) #set the means of X and Y variables

CovMatrix.X1X2Y <- matrix(c(1,p12,py1,
p12,1,py2,
py1,py2,1),3,3) # creates the covariance matrix (variances are 1, so it is also the correlation matrix)

set.seed(42)

CorrDataT<-mvrnorm(n=100, mu=Means.X1X2Y,Sigma=CovMatrix.X1X2Y, empirical=TRUE)

#Convert them to a "Data.Frame" & add our labels to the vectors we created

CorrDataT<-as.data.frame(CorrDataT)

colnames(CorrDataT) <- c("Practice","Anxiety","Memory")

- Use the stargazer package to see the models side by side

- Change type="latex" below to type="text" when you want to view the results right in the R console

library(stargazer)

###############Model 1

Model.1<-lm(Memory~ Practice, data = CorrDataT)

###############Model 2

Model.2<-lm(Memory~ Practice+Anxiety, data = CorrDataT)

stargazer(Model.1,Model.2,type="latex",

intercept.bottom = FALSE, single.row=TRUE,

star.cutoffs=c(.05,.01,.001), notes.append = FALSE,

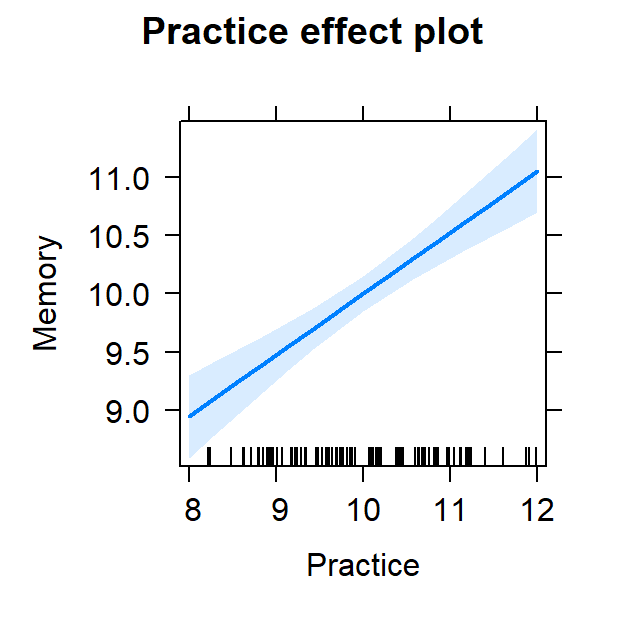

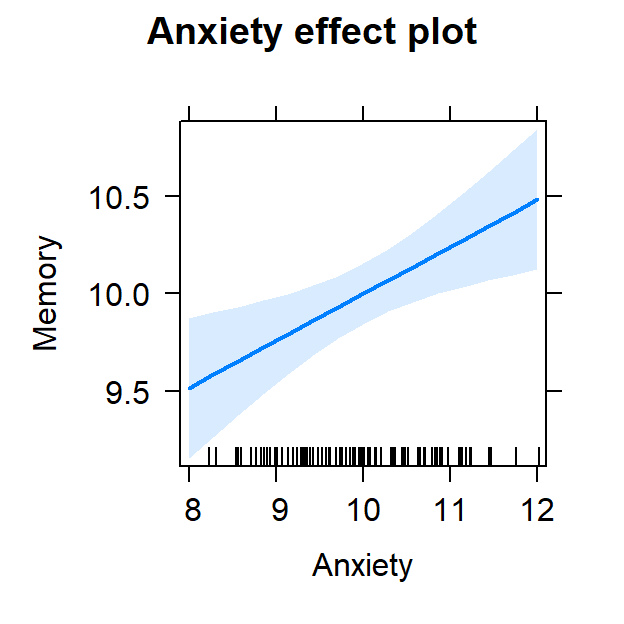

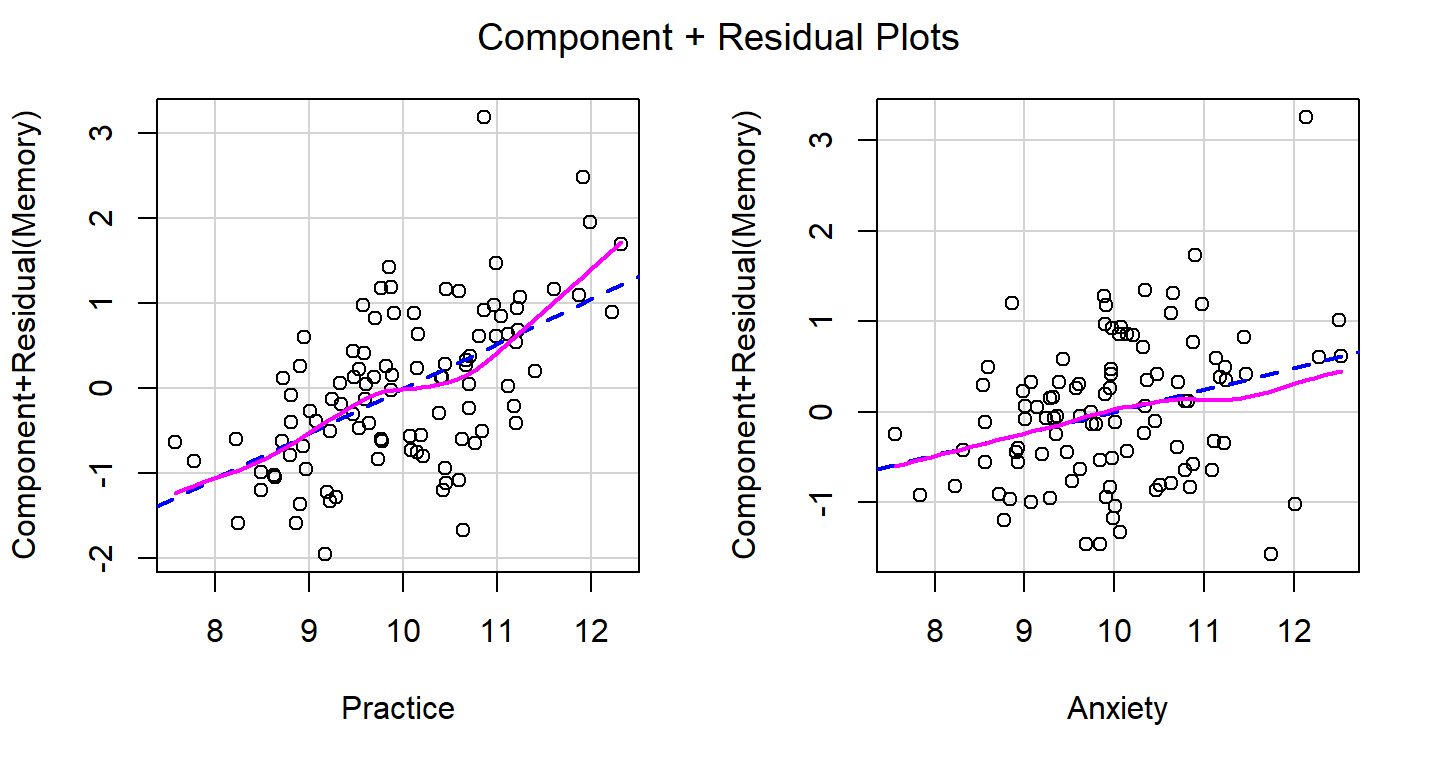

header=FALSE)Plots

- Plotting is now a bit more complex because we have two predictors

- Simple scatter plots show the raw (zero-order) relationships; they are not semi-partialed

- Also, although we are not interacting the two variables, each has an effect on the DV

- We must visualize both effects

- There are different suggestions on how to do this [we will come back to differences in plotting later]

library(effects)

#plot individual effects

Model.2.Plot <- allEffects(Model.2,

xlevels=list(Practice=seq(8, 12, 1),

Anxiety=seq(8, 12, 1)))

plot(Model.2.Plot, 'Practice', ylab="Memory")

plot(Model.2.Plot, 'Anxiety', ylab="Memory")
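As a rough illustration of what a partialed effect plot displays, the sketch below predicts Memory across Practice while holding Anxiety at its mean, using base R `predict()`. It regenerates the example data so that it runs on its own. The names `dat`, `fit`, `grid`, and `pred` are illustrative, and this approximates the idea rather than describing how the effects package works internally.

```r
library(MASS)  # mvrnorm

# Recreate the example data (same correlations as above)
Sigma <- matrix(c(1, .3, .6,
                  .3, 1, .4,
                  .6, .4, 1), 3, 3)
set.seed(42)
dat <- as.data.frame(mvrnorm(n = 100, mu = c(10, 10, 10),
                             Sigma = Sigma, empirical = TRUE))
colnames(dat) <- c("Practice", "Anxiety", "Memory")

fit <- lm(Memory ~ Practice + Anxiety, data = dat)

# Vary Practice over a grid while fixing Anxiety at its sample mean
grid <- data.frame(Practice = seq(8, 12, 1),
                   Anxiety  = mean(dat$Anxiety))
pred <- predict(fit, newdata = grid, interval = "confidence")

# Partialed prediction line with its confidence band
plot(grid$Practice, pred[, "fit"], type = "l",
     xlab = "Practice", ylab = "Memory (Anxiety held at its mean)",
     ylim = range(pred))
lines(grid$Practice, pred[, "lwr"], lty = 2)
lines(grid$Practice, pred[, "upr"], lty = 2)
```

The slope of this line is the Practice coefficient from the two-predictor model, not the slope you would get from a simple scatter plot of Memory on Practice.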

Fully Indirect

- Not spurious, but X1 is not direct to Y (X2 is intervening completely)

Full Redundancy (Spurious models)

- Happens when \(r_{Y2} \approx r_{Y1}r_{12}\)

- X1 is confounded with X2

- X1 and X2 are redundant
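A quick numeric check of this condition, using the correlations from the example that follows (.6, .6, and .9999). This is a minimal sketch with illustrative variable names. It also hints at why redundant predictors are a problem for estimation, assuming the usual two-predictor result that the variance inflation factor is \(1/(1 - r_{12}^2)\).

```r
r_y1 <- 0.6     # X1 with Y
r_y2 <- 0.6     # X2 with Y
r_12 <- 0.9999  # X1 with X2

# Full redundancy: r_Y2 is almost exactly what X1 alone would imply
implied_r_y2 <- r_y1 * r_12
gap <- r_y2 - implied_r_y2

# Standardized slopes when both predictors are entered together
beta_1 <- (r_y1 - r_y2 * r_12) / (1 - r_12^2)
beta_2 <- (r_y2 - r_y1 * r_12) / (1 - r_12^2)

# Variance inflation factor for either predictor in a two-predictor model
vif_two <- 1 / (1 - r_12^2)

print(c(implied_r_y2 = implied_r_y2, gap = gap))
print(c(beta_1 = beta_1, beta_2 = beta_2))
print(vif_two)
```

Each slope falls to about .30, half of the zero-order .6, and the variance inflation factor is roughly 5000, so the individual coefficients are estimated very imprecisely.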

Example of Full Redundancy

I.py1 =.6 #Cor between X1 (Working Memory Digit Span) and Memory Errors

I.py2 =.6 #Cor between X2 (Auditory Working Memory) and Memory Errors

I.p12 =.9999 #Cor between X1 Working Memory and Auditory Working Memory

I.Means.X1X2Y<- c(10,10,10) #set the means of X and Y variables

I.CovMatrix.X1X2Y <- matrix(c(1,I.p12,I.py1,
I.p12,1,I.py2,
I.py1,I.py2,1),3,3) # creates the covariance matrix

set.seed(42)

IData<-mvrnorm(n=100, mu=I.Means.X1X2Y,Sigma=I.CovMatrix.X1X2Y, empirical=TRUE)

IData<-as.data.frame(IData)

#lets add our labels to the vectors we created

colnames(IData) <- c("WM","AWM","Errors")

###############Model 1

Spurious.1<-lm(Errors~ WM, data = IData)

###############Model 2

Spurious.2<-lm(Errors~ AWM, data = IData)

###############Model 3

Spurious.3<-lm(Errors~ WM+AWM, data = IData)

stargazer(Spurious.1,Spurious.2,Spurious.3,type="latex",

intercept.bottom = FALSE, single.row=TRUE,

star.cutoffs=c(.05,.01,.001), notes.append = FALSE,

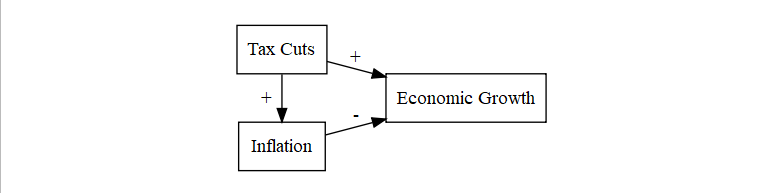

header=FALSE)Suppression

- Suppression is a strange case where the IV -> DV relationship is hidden (suppressed) by another variable

- Example: tax cuts cause growth, but tax cuts also cause inflation. The effect of tax cuts alone might look positive, but once inflation is added to the model the estimated effect of tax cuts on growth can look quite different

- Or the suppressor variable can cause a flip in the sign of the relationship

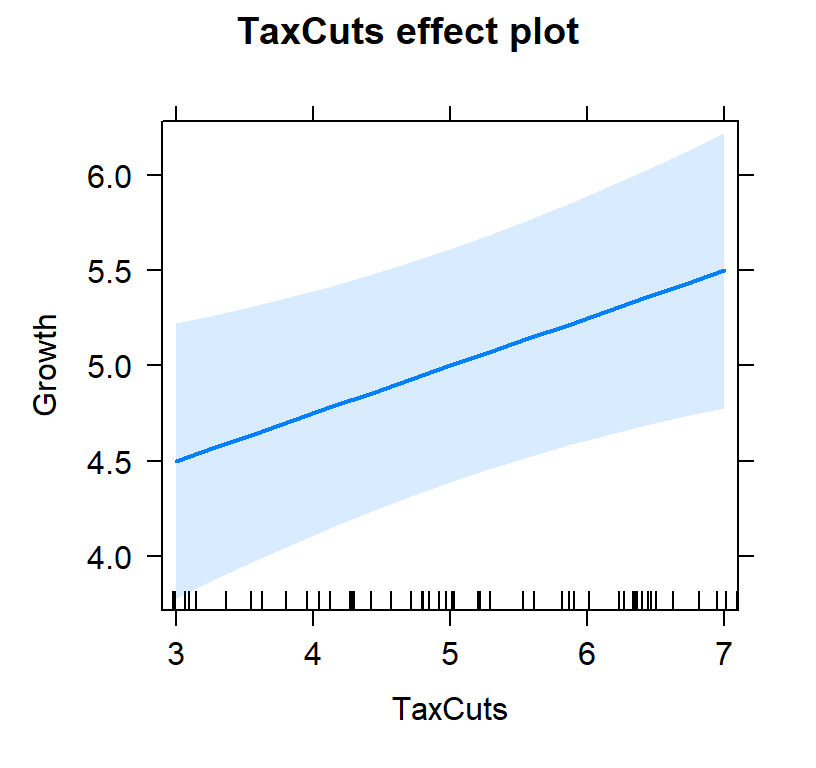
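The plotting code below uses two fitted models, `TaxCutsOnly` and `Full.Model`, that are not defined in the text above. Here is a minimal sketch that would produce them, assuming simulated data in the same style as the earlier examples. The covariance values (variances of 10; covariances of 2.5, -5.5, and 4), the means of 5, and the name `SuppData` are assumptions chosen to produce a suppression pattern.

```r
library(MASS)  # mvrnorm

# Assumed covariances (illustrative values)
sup.py1 <-  2.5  # tax cuts and growth
sup.py2 <- -5.5  # inflation and growth
sup.p12 <-  4    # tax cuts and inflation

Supp.Means <- c(5, 5, 5)
Supp.CovMatrix <- matrix(c(10,      sup.p12, sup.py1,
                           sup.p12, 10,      sup.py2,
                           sup.py1, sup.py2, 10), 3, 3)

set.seed(42)
SuppData <- as.data.frame(mvrnorm(n = 100, mu = Supp.Means,
                                  Sigma = Supp.CovMatrix, empirical = TRUE))
colnames(SuppData) <- c("TaxCuts", "inflation", "growth")

# Model 1: tax cuts alone
TaxCutsOnly <- lm(growth ~ TaxCuts, data = SuppData)
# Model 2: tax cuts controlling for inflation
Full.Model <- lm(growth ~ TaxCuts + inflation, data = SuppData)

print(round(coef(TaxCutsOnly), 3))
print(round(coef(Full.Model), 3))
```

Under these assumed values the slope for tax cuts alone is about .25, and it rises to about .56 once inflation is controlled, while inflation itself has a larger negative slope (about -.77). In other words, tax cuts help, but they do not override inflation.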

Plot predictions

- How you plot depends on your theory/experiment/story

- We need to control for inflation (or view the tax slope at different levels of inflation)

- First, let's view the tax slope without controlling for inflation

#plot individual effects

Tax.Model.Plot <- allEffects(TaxCutsOnly,

xlevels=list(TaxCuts=seq(3, 7, 1)))

plot(Tax.Model.Plot, 'TaxCuts', ylab="Growth")

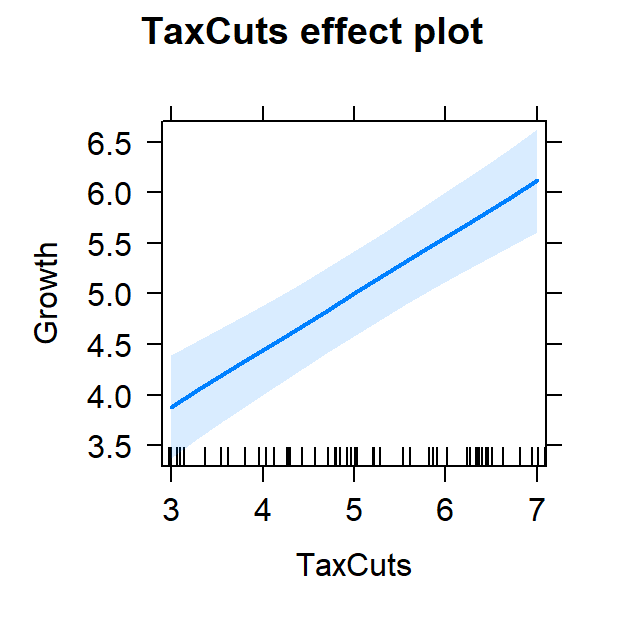

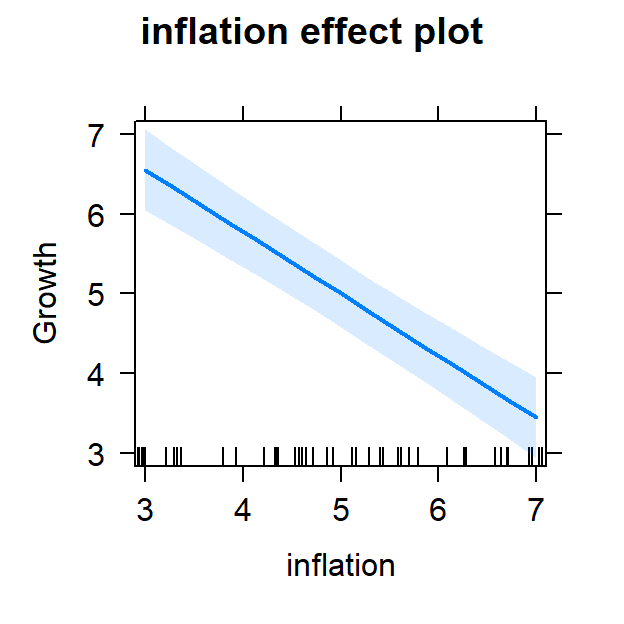

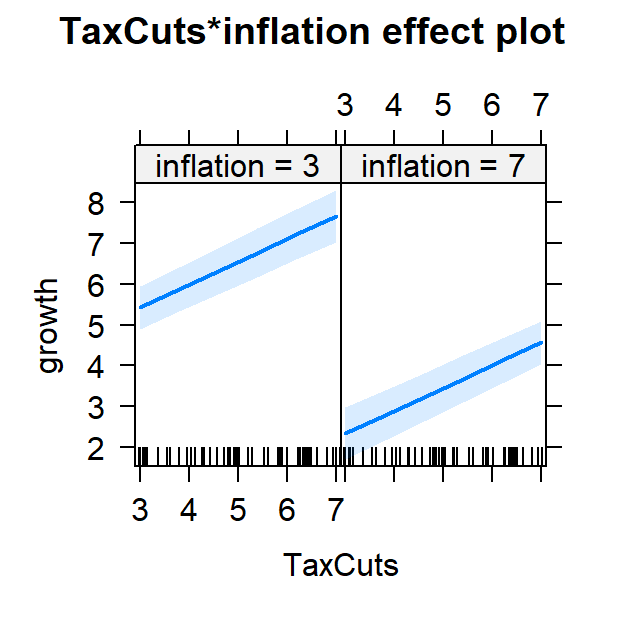

- Now let's view it controlling for inflation

#plot individual effects

Full.Model.Plot <- allEffects(Full.Model,

xlevels=list(TaxCuts=seq(3, 7, 1),

inflation=seq(3, 7, 1)))

plot(Full.Model.Plot, 'TaxCuts', ylab="Growth")

plot(Full.Model.Plot, 'inflation', ylab="Growth")

#plot both effects

Full.Model.Plot2<-Effect(c("TaxCuts", "inflation"), Full.Model,

xlevels=list(TaxCuts=c(3, 7),

inflation=c(3,7)))

plot(Full.Model.Plot2)

Estimating population R-squared

- \(\rho^2\) is estimated by multiple \(R^2\)

- Problem: in small samples correlations are unstable (and inflated)

- Also, as we add predictors, \(R^2\) gets inflated

- So we use an adjusted multiple \(R^2\)

- Adjusted \(R^2 = 1 - (1 - R_Y^2)\frac{n-1}{n-k-1}\), where \(n\) is the number of cases and \(k\) is the number of predictors
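The adjustment is easy to compute by hand. A minimal sketch follows; the helper name `adjusted_r2` is hypothetical, and the built-in `mtcars` data set is used only as a convenient stand-in that is not part of the original notes.

```r
# Adjusted R^2 from R^2, sample size n, and number of predictors k
adjusted_r2 <- function(r2, n, k) {
  1 - (1 - r2) * (n - 1) / (n - k - 1)
}

# Compare against what lm() reports, using a built-in data set
fit <- lm(mpg ~ wt + hp, data = mtcars)
s <- summary(fit)

by_hand <- adjusted_r2(s$r.squared, n = nrow(mtcars), k = 2)
print(c(by_hand = by_hand, from_lm = s$adj.r.squared))

# The penalty grows as predictors are added to a small sample
print(adjusted_r2(0.5, n = 20, k = 1:5))
```

The last line shows the same \(R^2\) of .5 shrinking further with each extra predictor when n is only 20.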

Multiple Linear Regression Assumptions

- No multicollinearity: predictors cannot be fully (or nearly fully) redundant [check the correlations between predictors]

- Homoscedasticity: the residuals have constant variance across the fitted values

- Normal distribution of residuals

- Absence of outliers

- Ratio of cases to predictors

- Number of cases must exceed the number of predictors

- Barely acceptable minimum: 5 cases per predictor

- Preferred minimum: 20-30 cases per predictor

- Linearity of prediction

- Independence (no auto-correlated errors)

How to check assumptions?

- First, let's fit the model

Linear.Model<-lm(Memory~ Practice+Anxiety, data = CorrDataT)

Let's test for multicollinearity

- Multicollinearity can be detected when the variance of the estimates for the terms you are interested in becomes inflated

- So we need to examine the variance of each predictor's coefficient

- Basic logical step 1) get the variance of the predictor's coefficient with only that term in the model [Vmin]

- Basic logical step 2) get the variance of the same coefficient with all the other terms in the model [Vmax]

- Basic logical step 3) take the ratio Vmax/Vmin; a common rule of thumb is that a value > 4 signals a problem

- That's a lot of steps. R can do it for you with basically one line of code

- Variance inflation factors (vif)
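For intuition, the variance inflation factor for predictor j is usually defined as \(1/(1 - R_j^2)\), where \(R_j^2\) comes from regressing that predictor on the other predictors. A minimal base-R sketch under that definition, using the predictor correlation of .3 from the running example (the helper name `vif_from_cor` is hypothetical):

```r
# VIFs are the diagonal of the inverse of the predictor correlation matrix,
# which equals 1 / (1 - R_j^2) for each predictor j
vif_from_cor <- function(R) {
  diag(solve(R))
}

# Practice and Anxiety correlate .3 in the running example
R_pred <- matrix(c(1, .3,
                   .3, 1), 2, 2,
                 dimnames = list(c("Practice", "Anxiety"),
                                 c("Practice", "Anxiety")))

vifs <- vif_from_cor(R_pred)
print(round(vifs, 6))
print(vifs > 4)  # rule-of-thumb flag
```

This gives about 1.099 for both predictors, which matches the `vif()` output from the car package shown below.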

library(car)

vif(Linear.Model) # variance inflation factors ## Practice Anxiety

## 1.098901 1.098901vif(Linear.Model) > 4 # problem?## Practice Anxiety

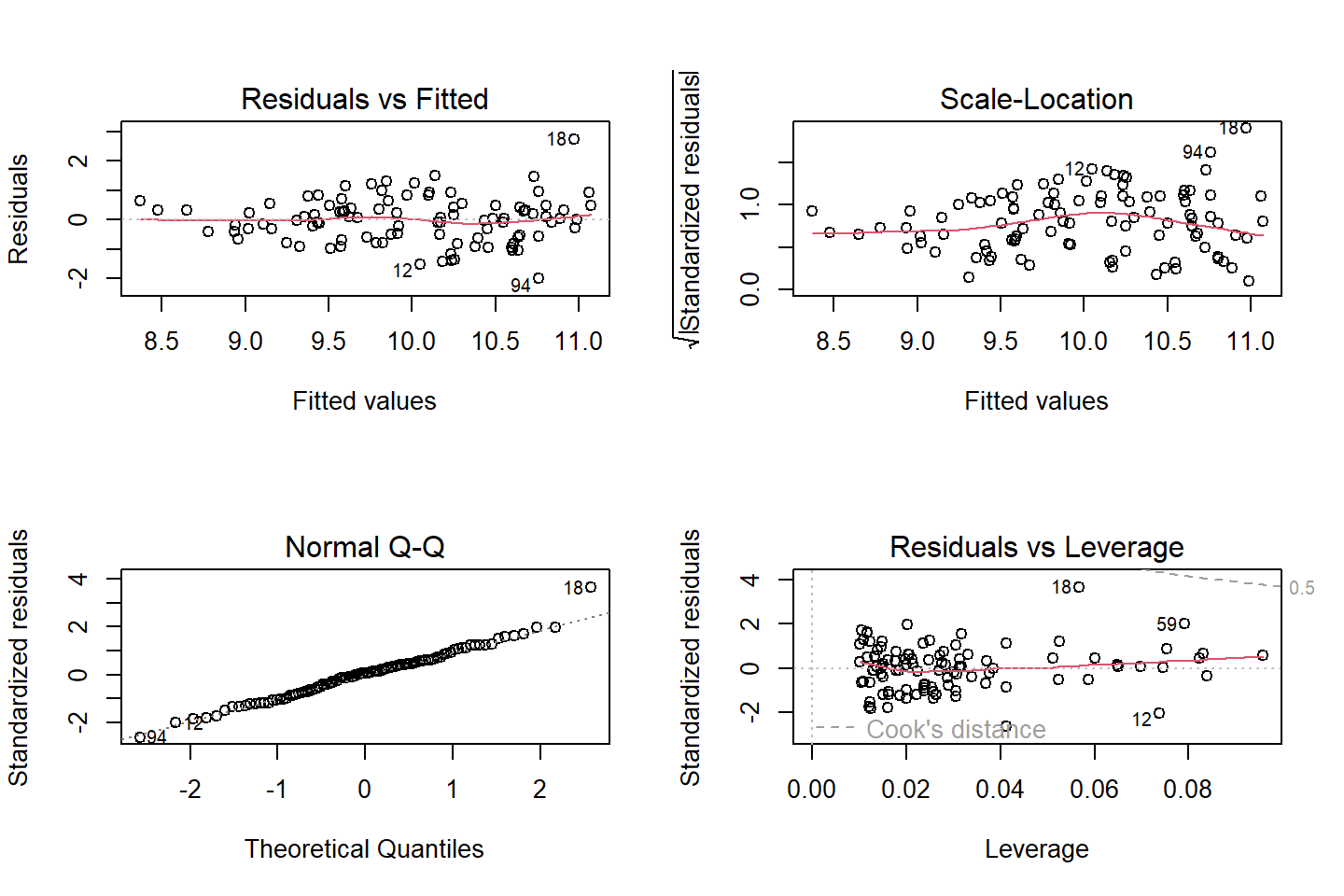

## FALSE FALSElets check normality, outlinears visually first

#To see them all at once

layout(matrix(c(1,2,3,4),2,2)) # optional 4 graphs/page

plot(Linear.Model)

Residuals vs fitted and square-rooted residuals vs fitted

- Should be homoscedastic (randomly distributed around 0)

- There is a statistical test (in R, but not SPSS)
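To make the test less of a black box: a score test of this kind can be computed by regressing the scaled squared residuals on the fitted values. The sketch below assumes the Breusch-Pagan / Cook-Weisberg form of the test. It uses the built-in `mtcars` data and a hypothetical helper name `score_test_ncv`, neither of which appears in the original notes, so treat it as an approximation of the idea rather than a reproduction of the car package's code.

```r
# Score test for non-constant error variance (variance ~ fitted values)
score_test_ncv <- function(model) {
  e <- residuals(model)
  n <- length(e)
  u <- e^2 / (sum(e^2) / n)      # squared residuals scaled by the ML variance estimate
  aux <- lm(u ~ fitted(model))   # auxiliary regression on the fitted values
  reg_ss <- sum((fitted(aux) - mean(u))^2)
  chisq <- reg_ss / 2            # score statistic with 1 df
  c(Chisquare = chisq, Df = 1,
    p = pchisq(chisq, df = 1, lower.tail = FALSE))
}

fit <- lm(mpg ~ wt + hp, data = mtcars)
result <- score_test_ncv(fit)
print(result)
```

A small p-value suggests that the spread of the residuals changes with the fitted values.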

# Evaluate homoscedasticity

# non-constant error variance test

ncvTest(Linear.Model)

## Non-constant Variance Score Test
## Variance formula: ~ fitted.values
## Chisquare = 6.705913, Df = 1, p = 0.0096094

- Here p < .01, so the test flags non-constant error variance in this simulated sample

Q-Q plot

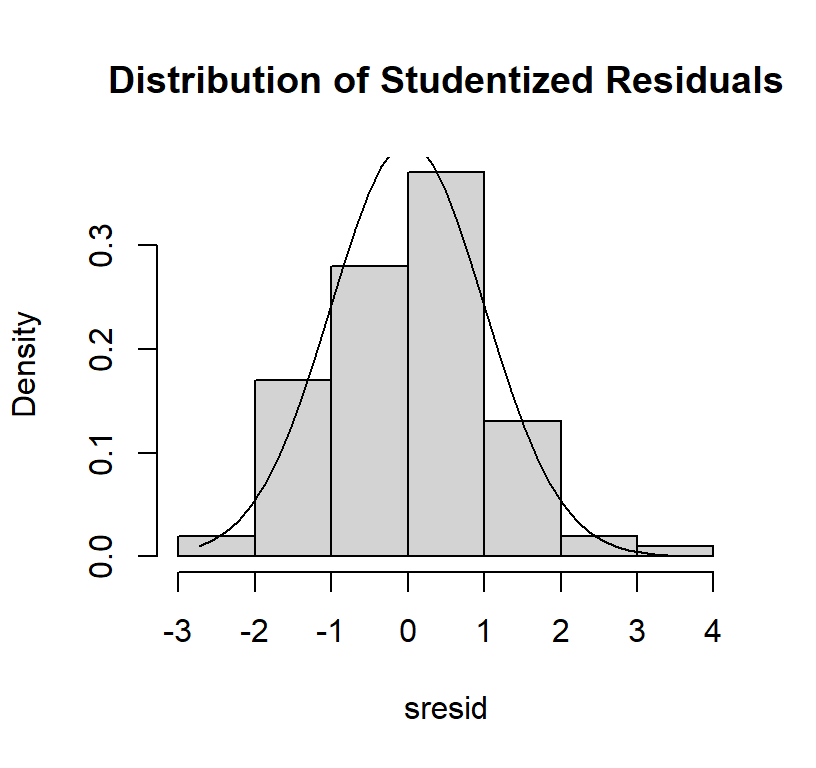

- Points should fall along a straight line

- You can also visualize the residuals as a histogram

# distribution of studentized residuals

sresid <- studres(Linear.Model) # studres() comes from MASS (loaded earlier)

hist(sresid, freq=FALSE,

main="Distribution of Studentized Residuals")

xfit<-seq(min(sresid),max(sresid),length=40)

yfit<-dnorm(xfit)

lines(xfit, yfit)

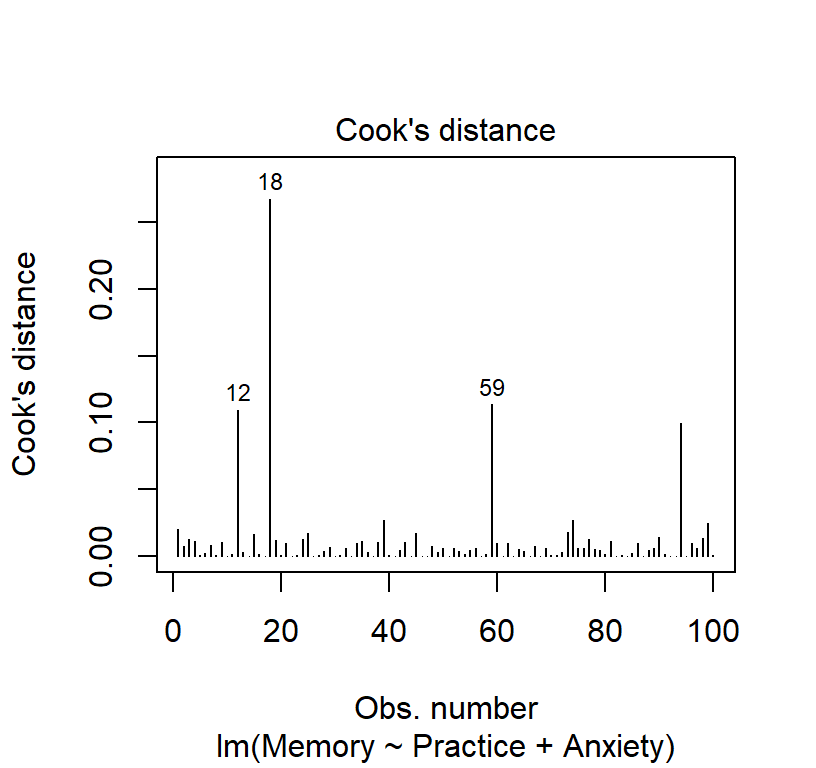

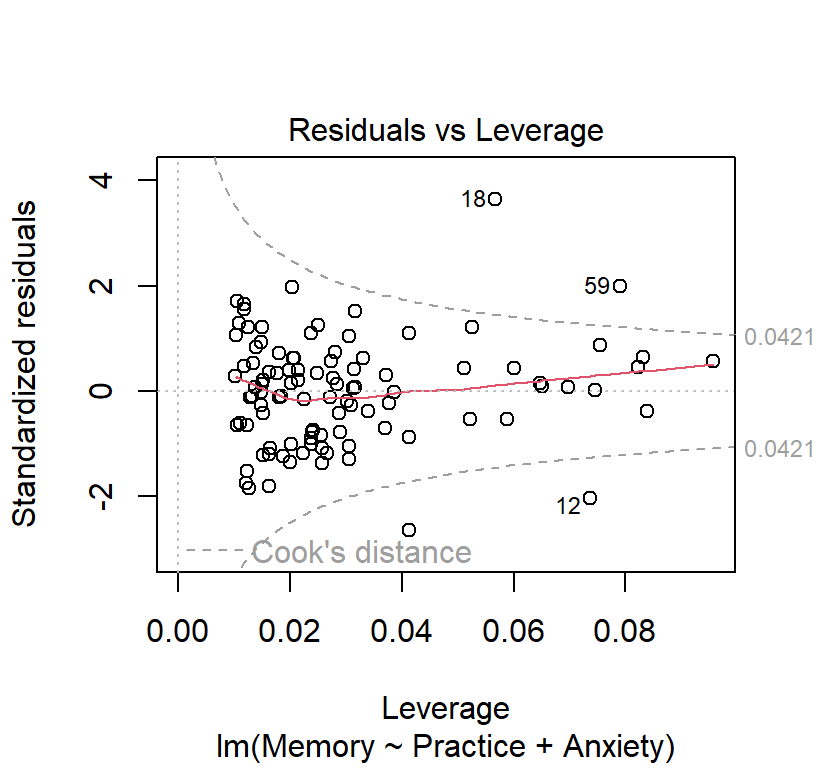
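The same check can be done numerically. As a rough sketch (using the built-in `mtcars` data and illustrative names, not the notes' own objects), `qqnorm()` returns the theoretical and observed quantiles, and their correlation should be close to 1 when the residuals are approximately normal:

```r
fit <- lm(mpg ~ wt + hp, data = mtcars)
sres <- rstudent(fit)  # studentized residuals (base R counterpart of MASS::studres)

# Q-Q plot with reference line
qq <- qqnorm(sres, main = "Q-Q Plot of Studentized Residuals")
qqline(sres)

# Correlation between theoretical and observed quantiles
qq_cor <- cor(qq$x, qq$y)
print(qq_cor)
```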

Cook’s distances

- It's possible for one or more observations to “pull” the regression line away from the trend in the rest of the data

- Cook’s Distance is one of several possible measures of influence.

- Cook’s D for an observation is the sum of the squared differences between the fitted values from the full model and the fitted values obtained when that observation is left out, divided by the error variance times the number of parameters in the model.

- R did this automatically in plot 4, and we can see a few people labeled with numbers (those are the most extreme cases, the candidate outliers)

- We can also examine influence with a little R coding (setting a more conservative threshold)
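Equivalently, Cook's D can be written in terms of each observation's residual and leverage, which avoids refitting the model n times. A minimal sketch of that closed form, using the built-in `mtcars` data as a stand-in (an assumption, not the notes' data):

```r
fit <- lm(mpg ~ wt + hp, data = mtcars)

e  <- residuals(fit)               # raw residuals
h  <- hatvalues(fit)               # leverage of each observation
p  <- length(coef(fit))            # number of parameters, including the intercept
s2 <- sum(e^2) / df.residual(fit)  # error variance (mean squared error)

# Cook's distance: squared residual scaled by p * s2, weighted by leverage
cooks_by_hand <- (e^2 / (p * s2)) * h / (1 - h)^2

# Should agree with R's built-in function
print(all.equal(cooks_by_hand, cooks.distance(fit)))
```

The formula makes the two ingredients of influence visible: a case needs both a sizable residual and a sizable leverage to get a large D.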

# Cook's D plot

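# identify D values above the cutoff 4/(n-p-2), where p counts all coefficients including the intercept
# (here 4/95, slightly larger than the common 4/(n-k-1) rule with k predictors, which would be 4/97)

cutoff <- 4/((nrow(CorrDataT)-length(Linear.Model$coefficients)-2))

cutoff

## [1] 0.04210526

plot(Linear.Model, which=4, cook.levels=cutoff)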

plot(Linear.Model, which=5, cook.levels=cutoff)

- Remove those with large Cook’s distances (if we want to)

CorrDataT$CooksD<-(cooks.distance(Linear.Model))

CorrDataT_Cleaned<-subset(CorrDataT,CooksD < cutoff)

nrow(CorrDataT_Cleaned)

## [1] 96

Another method of testing for influence

- Bonferroni p-value for the most extreme observation

- Notice it gives a different number of outliers

- Not all tests agree
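The logic of this test can be sketched in base R: take the largest absolute studentized residual, get its two-sided t-test p-value, and multiply by the number of observations. The sketch assumes n - p - 1 degrees of freedom (p = number of coefficients) and uses the built-in `mtcars` data with illustrative names. It is a rough approximation of the idea, not the car package's implementation.

```r
fit <- lm(mpg ~ wt + hp, data = mtcars)

rs <- rstudent(fit)          # studentized (deleted) residuals
n  <- length(rs)
df <- df.residual(fit) - 1   # n - p - 1

worst <- which.max(abs(rs))  # most extreme observation
p_unadj <- 2 * pt(abs(rs[worst]), df = df, lower.tail = FALSE)
p_bonf  <- min(1, n * p_unadj)  # Bonferroni correction for n implicit tests

print(data.frame(rstudent = rs[worst],
                 unadjusted_p = p_unadj,
                 bonferroni_p = p_bonf))
```

In the output shown below, the Bonferroni p (0.017396) is exactly 100 times the unadjusted p (0.00017396), consistent with n = 100.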

# Assessing Outliers

outlierTest(Linear.Model) # Bonferroni p-value for most extreme obs

## rstudent unadjusted p-value Bonferroni p
## 18 3.906991 0.00017396 0.017396

Linearity and autocorrelation tests

- The smoothed green lines should follow the straight red dotted line closely

- The autocorrelation test should be non-significant (meaning each residual is not correlated with the next residual)
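The Durbin-Watson statistic itself is simple arithmetic on the residuals. A minimal sketch, using the built-in `mtcars` data purely as a stand-in (its row order has no time meaning, so this only illustrates the computation; the variable names are illustrative):

```r
fit <- lm(mpg ~ wt + hp, data = mtcars)
e <- residuals(fit)

# Durbin-Watson statistic: squared successive differences over squared residuals
dw <- sum(diff(e)^2) / sum(e^2)

# Lag-1 autocorrelation of the residuals
n <- length(e)
r1 <- sum(e[-1] * e[-n]) / sum(e^2)

print(c(DW = dw, lag1_autocorrelation = r1, approx_DW = 2 * (1 - r1)))
```

Values near 2 indicate little lag-1 autocorrelation. The output shown below fits this pattern: an autocorrelation of about 0.089 goes with a D-W statistic of about 1.81, close to 2(1 - 0.089) ≈ 1.82.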

# Evaluate Nonlinearity

# component + residual plot aka partial-residual plots

crPlots(Linear.Model)

# Test for Autocorrelated Errors

durbinWatsonTest(Linear.Model)

## lag Autocorrelation D-W Statistic p-value
## 1 0.08884707 1.814168 0.356
## Alternative hypothesis: rho != 0

Save the results of modeling

- This lets you save the model table as a .doc file (uncomment the code below to run it)

library(texreg)

#htmlreg(list(Linear.Model),file = "ModelResults.doc",

# single.row = FALSE, stars = c(0.001, 0.01, 0.05,0.1),digits=3,

# inline.css = FALSE, doctype = TRUE, html.tag = TRUE,

# head.tag = TRUE, body.tag = TRUE)